Feature Engineering and Training our Model¶

We’ll first set up the Glue context, which lets us read from the Glue Data Catalog, and define some constants.

import sys

from awsglue.transforms import *

from awsglue.utils import getResolvedOptions

from pyspark.context import SparkContext

from awsglue.context import GlueContext

from awsglue.job import Job

glueContext = GlueContext(SparkContext.getOrCreate())

database_name = '2019reinventWorkshop'

canonical_table_name = "canonical"

Starting Spark application

| ID | YARN Application ID | Kind | State | Spark UI | Driver log | Current session? |

|---|---|---|---|---|---|---|

| 0 | application_1575125038238_0001 | pyspark | idle | Link | Link | ✔ |

SparkSession available as 'spark'.

Reading the Data using the Catalog¶

Using the Glue context, we can read in the data by looking up the table in the Glue Data Catalog.

Here we can see there are roughly 452 million records.

taxi_data = glueContext.create_dynamic_frame.from_catalog(database=database_name, table_name=canonical_table_name)

print("2018/2019 Taxi Data Count: ", taxi_data.count())

taxi_data.printSchema()

2018/2019 Taxi Data Count: 452091095

root

|-- vendorid: string

|-- pickup_datetime: timestamp

|-- dropoff_datetime: timestamp

|-- pulocationid: long

|-- dolocationid: long

|-- type: string

Caching in Spark¶

We’ll use the taxi DataFrame repeatedly, so we’ll cache it here and show some sample records.

df = taxi_data.toDF().cache()

df.show(10, False)

+--------+-------------------+-------------------+------------+------------+-----+

|vendorid|pickup_datetime |dropoff_datetime |pulocationid|dolocationid|type |

+--------+-------------------+-------------------+------------+------------+-----+

|null |null |null |null |null |green|

|null |2018-01-01 00:18:50|2018-01-01 00:24:39|null |null |green|

|null |2018-01-01 00:30:26|2018-01-01 00:46:42|null |null |green|

|null |2018-01-01 00:07:25|2018-01-01 00:19:45|null |null |green|

|null |2018-01-01 00:32:40|2018-01-01 00:33:41|null |null |green|

|null |2018-01-01 00:32:40|2018-01-01 00:33:41|null |null |green|

|null |2018-01-01 00:38:35|2018-01-01 01:08:50|null |null |green|

|null |2018-01-01 00:18:41|2018-01-01 00:28:22|null |null |green|

|null |2018-01-01 00:38:02|2018-01-01 00:55:02|null |null |green|

|null |2018-01-01 00:05:02|2018-01-01 00:18:35|null |null |green|

+--------+-------------------+-------------------+------------+------------+-----+

only showing top 10 rows

Removing invalid dates¶

When we originally looked at this data, we saw that it contained a lot of bad records, including timestamps outside the valid range. Let’s make sure we use only the valid records when aggregating and creating our time series.

from pyspark.sql.functions import to_date, lit

from pyspark.sql.types import TimestampType

dates = ("2018-01-01", "2019-07-01")

date_from, date_to = [to_date(lit(s)).cast(TimestampType()) for s in dates]

df = df.where((df.pickup_datetime > date_from) & (df.pickup_datetime < date_to))
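As a plain-Python illustration of the same range check (a sketch only, not part of the Glue job; the sample timestamps are made up), note that both comparisons are strict and that rows with a null pickup time are dropped, just as Spark drops rows where the predicate evaluates to null:

```python
from datetime import datetime

# Same bounds as the Spark filter above.
date_from = datetime(2018, 1, 1)
date_to = datetime(2019, 7, 1)

def in_range(pickup):
    """Mirror the Spark predicate: strictly after date_from, strictly before date_to."""
    return pickup is not None and date_from < pickup < date_to

# Hypothetical sample timestamps, including the kinds of bad values seen in raw data.
samples = [
    datetime(2018, 1, 1, 0, 18, 50),    # valid
    datetime(2008, 12, 31, 23, 59, 0),  # far too early
    datetime(2019, 7, 1, 0, 0, 0),      # exactly the upper bound: excluded
    None,                               # null pickup time
]
kept = [s for s in samples if in_range(s)]
print(kept)
```

Only the first sample survives the filter.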

We need to restructure the data so that each time step is a single row holding the time series values, followed by any numerical and categorical features.

Creating our time series (from individual records)¶

Right now the data consists of individual records with timestamps down to the second. We’ll assign each record to its day and then count/aggregate over those days.

Let’s start by adding a ts_resampled column.

from pyspark.sql.functions import col, max as max_, min as min_

## day = seconds*minutes*hours

unit = 60 * 60 * 24

epoch = (col("pickup_datetime").cast("bigint") / unit).cast("bigint") * unit

with_epoch = df.withColumn("epoch", epoch)

min_epoch, max_epoch = with_epoch.select(min_("epoch"), max_("epoch")).first()

# Reference range

ref = spark.range(

min_epoch, max_epoch + 1, unit

).toDF("epoch")

resampled_df = (ref

.join(with_epoch, "epoch", "left")

.orderBy("epoch")

.withColumn("ts_resampled", col("epoch").cast("timestamp")))

resampled_df.cache()

resampled_df.show(10, False)

+----------+--------+-------------------+-------------------+------------+------------+------+-------------------+

|epoch |vendorid|pickup_datetime |dropoff_datetime |pulocationid|dolocationid|type |ts_resampled |

+----------+--------+-------------------+-------------------+------------+------------+------+-------------------+

|1514764800|fhv |2018-01-01 04:01:19|2018-01-01 04:06:54|null |null |fhv |2018-01-01 00:00:00|

|1514764800|null |2018-01-01 10:55:25|2018-01-01 10:57:42|null |null |yellow|2018-01-01 00:00:00|

|1514764800|fhv |2018-01-01 03:43:11|2018-01-01 03:53:41|null |null |fhv |2018-01-01 00:00:00|

|1514764800|fhv |2018-01-01 04:12:23|2018-01-01 04:36:15|null |null |fhv |2018-01-01 00:00:00|

|1514764800|fhv |2018-01-01 05:27:22|2018-01-01 06:01:18|null |null |fhv |2018-01-01 00:00:00|

|1514764800|fhv |2018-01-01 04:50:57|2018-01-01 04:56:17|null |null |fhv |2018-01-01 00:00:00|

|1514764800|fhv |2018-01-01 04:23:56|2018-01-01 05:17:40|null |null |fhv |2018-01-01 00:00:00|

|1514764800|fhv |2018-01-01 17:03:23|2018-01-01 17:33:46|null |null |fhv |2018-01-01 00:00:00|

|1514764800|fhv |2018-01-01 17:48:59|2018-01-01 17:58:42|null |null |fhv |2018-01-01 00:00:00|

|1514764800|fhv |2018-01-01 15:57:23|2018-01-01 16:09:00|null |null |fhv |2018-01-01 00:00:00|

+----------+--------+-------------------+-------------------+------------+------------+------+-------------------+

only showing top 10 rows
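The epoch arithmetic above is just integer division on Unix seconds. Here is a minimal standard-library sketch of the same idea, assuming the timestamps are in UTC (as the output above suggests); `floor_to_day` is a name made up for this illustration:

```python
from datetime import datetime, timezone

UNIT = 60 * 60 * 24  # seconds in a day, as in the Spark code

def floor_to_day(ts):
    """Floor a UTC datetime to midnight using integer division on epoch seconds."""
    epoch = int(ts.timestamp())
    return datetime.fromtimestamp((epoch // UNIT) * UNIT, tz=timezone.utc)

# First pickup time from the sample output above.
pickup = datetime(2018, 1, 1, 4, 1, 19, tzinfo=timezone.utc)
print(int(pickup.timestamp()) // UNIT * UNIT)  # 1514764800
print(floor_to_day(pickup))                    # 2018-01-01 00:00:00+00:00
```

The value 1514764800 matches the epoch column shown for 2018-01-01 in the table above.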

Creating our time series data¶

You can see that every record now carries a ts_resampled column holding its day, which we can aggregate across.

from pyspark.sql import functions as func

count_per_day_resamples = resampled_df.groupBy(["ts_resampled", "type"]).count()

count_per_day_resamples.cache()

count_per_day_resamples.show(10, False)

+-------------------+------+------+

|ts_resampled |type |count |

+-------------------+------+------+

|2019-04-10 00:00:00|green |17165 |

|2018-02-21 00:00:00|green |25651 |

|2018-11-11 00:00:00|yellow|257698|

|2019-02-22 00:00:00|fhv |65041 |

|2018-03-15 00:00:00|yellow|348198|

|2018-12-30 00:00:00|fhv |683406|

|2019-03-07 00:00:00|yellow|291098|

|2018-11-28 00:00:00|green |22899 |

|2018-03-05 00:00:00|yellow|290631|

|2018-11-20 00:00:00|yellow|278900|

+-------------------+------+------+

only showing top 10 rows

We restructure it so that each taxi type is its own column in the dataset.¶

time_series_df = count_per_day_resamples.groupBy(["ts_resampled"])\

.pivot('type')\

.sum("count").cache()

time_series_df.show(10,False)

+-------------------+------+-----+------+

|ts_resampled |fhv |green|yellow|

+-------------------+------+-----+------+

|2019-06-18 00:00:00|69383 |15545|242304|

|2018-12-13 00:00:00|822745|24585|308411|

|2019-03-21 00:00:00|47855 |20326|274057|

|2018-09-09 00:00:00|794608|20365|256918|

|2018-01-31 00:00:00|640887|26667|319256|

|2018-08-16 00:00:00|717045|22113|277677|

|2018-03-21 00:00:00|508492|11981|183629|

|2018-09-20 00:00:00|723583|23378|298630|

|2018-05-15 00:00:00|689620|25458|309023|

|2018-12-24 00:00:00|640740|19314|185895|

+-------------------+------+-----+------+

only showing top 10 rows
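To make the two-step reshaping concrete, here is a small pure-Python sketch of what the count and pivot steps do. The trip records are hypothetical, and this only mimics the shape of the result; it is not how Spark executes it:

```python
from collections import Counter, defaultdict

# Hypothetical (day, type) pairs standing in for individual trip records.
trips = [
    ("2018-01-01", "green"), ("2018-01-01", "yellow"), ("2018-01-01", "yellow"),
    ("2018-01-02", "fhv"), ("2018-01-02", "yellow"),
]

# Step 1: the equivalent of groupBy(["ts_resampled", "type"]).count()
counts = Counter(trips)

# Step 2: the equivalent of groupBy("ts_resampled").pivot("type").sum("count")
types = sorted({taxi_type for _, taxi_type in trips})
pivoted = defaultdict(lambda: dict.fromkeys(types))  # missing combinations stay None
for (day, taxi_type), n in counts.items():
    pivoted[day][taxi_type] = n

for day in sorted(pivoted):
    print(day, pivoted[day])
```

Each day ends up as one row with one column per taxi type, and a type with no trips on a given day is left empty (null in Spark, None here).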

Local Data Manipulation¶

Now that we have an aggregated time series that is much smaller, let’s send it from the Spark cluster on Glue back to the local Python environment.

%%spark -o time_series_df

We are in the local pandas/Python environment now¶

%%local

import pandas as pd

print(time_series_df.dtypes)

time_series_df = time_series_df.set_index('ts_resampled', drop=True)

time_series_df = time_series_df.sort_index()

time_series_df.head()

ts_resampled datetime64[ns]

fhv int64

green int64

yellow int64

dtype: object

We’ll create the training window next. We are going to predict the next two weeks (14 days)¶

%%local

## number of time-steps that the model is trained to predict

prediction_length = 14

n_weeks = 4

end_training = time_series_df.index[-n_weeks*prediction_length]

print('end training time', end_training)

time_series = []

for ts in time_series_df.columns:

    time_series.append(time_series_df[ts])

time_series_training = []

for ts in time_series_df.columns:

    time_series_training.append(time_series_df.loc[:end_training][ts])

end training time 2019-05-06 00:00:00
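The split point is plain index arithmetic. The sketch below reproduces it with the standard library only, assuming the index is a gap-free daily range from 2018-01-01 through 2019-06-30 (which is what the date filter and the resampling above should produce):

```python
from datetime import date, timedelta

prediction_length = 14
n_weeks = 4  # used here as the number of held-out prediction windows

# Assumed daily index covering the filtered range.
start, end = date(2018, 1, 1), date(2019, 6, 30)
index = [start + timedelta(days=i) for i in range((end - start).days + 1)]

# Same expression as the notebook: count back 4 * 14 = 56 positions from the end.
end_training = index[-n_weeks * prediction_length]

# pandas .loc[:end_training] is label-based and includes the end label itself.
train = [d for d in index if d <= end_training]

print(len(index), end_training, len(train))  # 546 2019-05-06 491
```

Because the label-based slice is inclusive, the training series contains 491 points and the last 55 days are seen only in the test series.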

We’ll install matplotlib in the local kernel to visualize this.¶

%%local

!pip install matplotlib > /dev/null

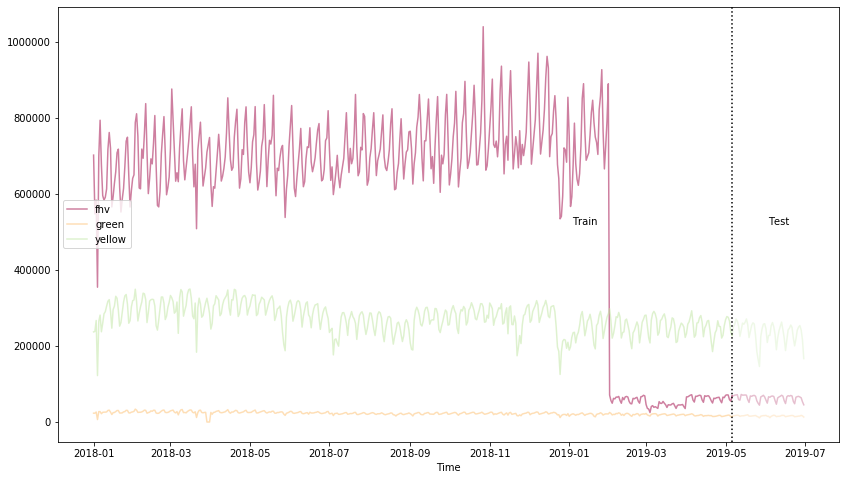

Visualizing the training and test dataset:¶

In this next cell, we can see how the training and test datasets are split up. Since this is a time series, we don’t do a random split; instead, we look at how far into the future we are predicting and use that as a knob.

%%local

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

#cols_float = time_series_df.drop(['pulocationid', 'dolocationid'], axis=1).columns

cols_float = time_series_df.columns

cmap = plt.get_cmap('Spectral')  # matplotlib.cm.get_cmap was removed in newer Matplotlib releases

colors = cmap(np.arange(0,len(cols_float))/len(cols_float))

plt.figure(figsize=[14,8]);

for c in range(len(cols_float)):

    plt.plot(time_series_df.loc[:end_training][cols_float[c]], alpha=0.5, color=colors[c], label=cols_float[c]);

plt.legend(loc='center left');

for c in range(len(cols_float)):

    plt.plot(time_series_df.loc[end_training:][cols_float[c]], alpha=0.25, color=colors[c], label=None);

plt.axvline(x=end_training, color='k', linestyle=':');

plt.text(time_series_df.index[int((time_series_df.shape[0]-n_weeks*prediction_length)*0.75)], time_series_df.max().max()/2, 'Train');

plt.text(time_series_df.index[time_series_df.shape[0]-int(n_weeks*prediction_length/2)], time_series_df.max().max()/2, 'Test');

plt.xlabel('Time');

plt.show()

Cleaning our Time Series¶

FHV still has an issue: the time series drops sharply once the law takes effect.

We still need to pull in the FHVHV (high-volume for-hire vehicle) dataset, which starts in February 2019. It represents the rideshare apps moving to a different license type under the NYC TLC.

## we are running back on spark now

fhvhv_data = glueContext.create_dynamic_frame.from_catalog(database=database_name, table_name="fhvhv")

fhvhv_df = fhvhv_data.toDF().cache()

Let’s filter the time range just in case we have additional bad records here.¶

fhvhv_df = fhvhv_df.where((fhvhv_df.pickup_datetime > date_from) & (fhvhv_df.pickup_datetime < date_to)).cache()

from pyspark.sql.functions import to_timestamp

fhvhv_df = fhvhv_df.withColumn("pickup_datetime", to_timestamp("pickup_datetime", "yyyy-MM-dd HH:mm:ss"))

fhvhv_df.show(5, False)

+-----------------+--------------------+-------------------+-------------------+------------+------------+-------+

|hvfhs_license_num|dispatching_base_num|pickup_datetime |dropoff_datetime |pulocationid|dolocationid|sr_flag|

+-----------------+--------------------+-------------------+-------------------+------------+------------+-------+

|HV0003 |B02867 |2019-02-01 00:05:18|2019-02-01 00:14:57|245 |251 |null |

|HV0003 |B02879 |2019-02-01 00:41:29|2019-02-01 00:49:39|216 |197 |null |

|HV0005 |B02510 |2019-02-01 00:51:34|2019-02-01 01:28:29|261 |234 |null |

|HV0005 |B02510 |2019-02-01 00:03:51|2019-02-01 00:07:16|87 |87 |null |

|HV0005 |B02510 |2019-02-01 00:09:44|2019-02-01 00:39:56|87 |198 |null |

+-----------------+--------------------+-------------------+-------------------+------------+------------+-------+

only showing top 5 rows

Let’s first create our rollup column for the time resampling¶

from pyspark.sql.functions import col, max as max_, min as min_

## day = seconds*minutes*hours

unit = 60 * 60 * 24

epoch = (col("pickup_datetime").cast("bigint") / unit).cast("bigint") * unit

with_epoch = fhvhv_df.withColumn("epoch", epoch)

min_epoch, max_epoch = with_epoch.select(min_("epoch"), max_("epoch")).first()

ref = spark.range(

min_epoch, max_epoch + 1, unit

).toDF("epoch")

resampled_fhvhv_df = (ref

.join(with_epoch, "epoch", "left")

.orderBy("epoch")

.withColumn("ts_resampled", col("epoch").cast("timestamp")))

resampled_fhvhv_df = resampled_fhvhv_df.cache()

resampled_fhvhv_df.show(10, False)

+----------+-----------------+--------------------+-------------------+-------------------+------------+------------+-------+-------------------+

|epoch |hvfhs_license_num|dispatching_base_num|pickup_datetime |dropoff_datetime |pulocationid|dolocationid|sr_flag|ts_resampled |

+----------+-----------------+--------------------+-------------------+-------------------+------------+------------+-------+-------------------+

|1548979200|HV0003 |B02867 |2019-02-01 00:05:18|2019-02-01 00:14:57|245 |251 |null |2019-02-01 00:00:00|

|1548979200|HV0003 |B02879 |2019-02-01 00:41:29|2019-02-01 00:49:39|216 |197 |null |2019-02-01 00:00:00|

|1548979200|HV0005 |B02510 |2019-02-01 00:51:34|2019-02-01 01:28:29|261 |234 |null |2019-02-01 00:00:00|

|1548979200|HV0005 |B02510 |2019-02-01 00:03:51|2019-02-01 00:07:16|87 |87 |null |2019-02-01 00:00:00|

|1548979200|HV0005 |B02510 |2019-02-01 00:09:44|2019-02-01 00:39:56|87 |198 |null |2019-02-01 00:00:00|

|1548979200|HV0005 |B02510 |2019-02-01 00:59:55|2019-02-01 01:06:28|198 |198 |1 |2019-02-01 00:00:00|

|1548979200|HV0005 |B02510 |2019-02-01 00:12:06|2019-02-01 00:42:13|161 |148 |null |2019-02-01 00:00:00|

|1548979200|HV0005 |B02510 |2019-02-01 00:45:35|2019-02-01 01:14:56|148 |21 |null |2019-02-01 00:00:00|

|1548979200|HV0003 |B02867 |2019-02-01 00:10:48|2019-02-01 00:20:23|226 |260 |null |2019-02-01 00:00:00|

|1548979200|HV0003 |B02867 |2019-02-01 00:32:32|2019-02-01 00:40:25|7 |223 |null |2019-02-01 00:00:00|

+----------+-----------------+--------------------+-------------------+-------------------+------------+------------+-------+-------------------+

only showing top 10 rows

Create our Time Series now¶

from pyspark.sql import functions as func

count_per_day_resamples = resampled_fhvhv_df.groupBy(["ts_resampled"]).count()

count_per_day_resamples.cache()

count_per_day_resamples.show(10, False)

fhvhv_timeseries_df = count_per_day_resamples

+-------------------+------+

|ts_resampled |count |

+-------------------+------+

|2019-06-18 00:00:00|692171|

|2019-03-21 00:00:00|809819|

|2019-05-03 00:00:00|815626|

|2019-05-12 00:00:00|857727|

|2019-04-25 00:00:00|689853|

|2019-03-10 00:00:00|812902|

|2019-04-30 00:00:00|655312|

|2019-06-26 00:00:00|663954|

|2019-06-06 00:00:00|682378|

|2019-02-06 00:00:00|663516|

+-------------------+------+

only showing top 10 rows

Now we bring this new time series back to the local environment to join it with the existing one.

%%spark -o fhvhv_timeseries_df

We rename the count column to fhvhv so we can join it with the other DataFrame¶

%%local

fhvhv_timeseries_df = fhvhv_timeseries_df.rename(columns={"count": "fhvhv"})

fhvhv_timeseries_df = fhvhv_timeseries_df.set_index('ts_resampled', drop=True)

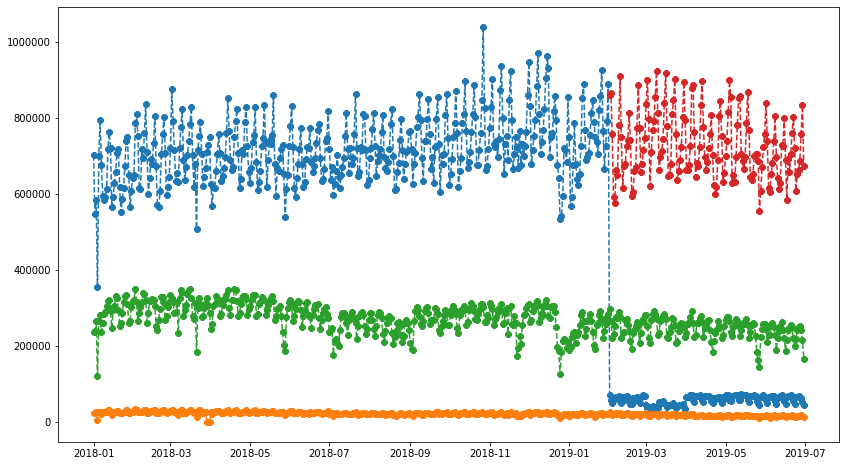

Visualizing all the time series data¶

Looking at the FHVHV dataset, which starts on February 1st, you can see that the time series looks normal and there is no giant drop on that day.

%%local

plt.figure(figsize=[14,8]);

plt.plot(time_series_df.join(fhvhv_timeseries_df), marker='8', linestyle='--')

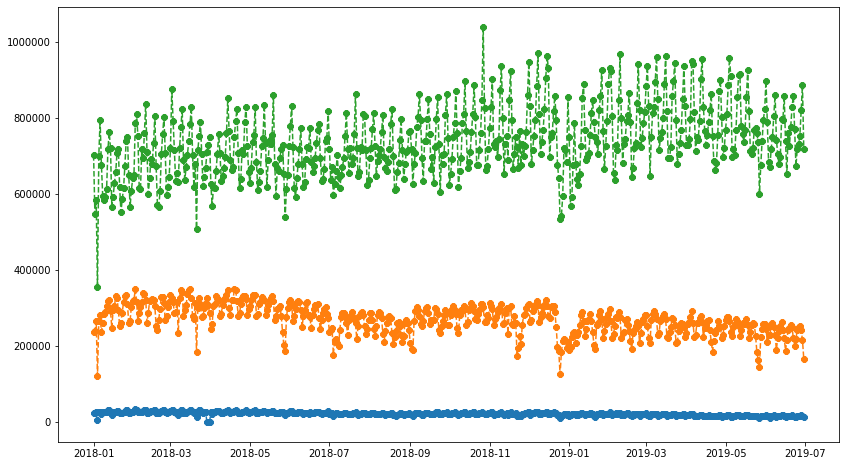

But now we need to combine the FHV and FHVHV dataset¶

Let’s create a new dataset with a full_fhv column that combines both for-hire vehicles (FHV) and high-volume for-hire vehicles (FHVHV).

%%local

full_timeseries = time_series_df.join(fhvhv_timeseries_df)

full_timeseries = full_timeseries.fillna(0)

full_timeseries['full_fhv'] = full_timeseries['fhv'] + full_timeseries['fhvhv']

full_timeseries = full_timeseries.drop(['fhv', 'fhvhv'], axis=1)

full_timeseries = full_timeseries.fillna(0)
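The order of operations matters here: the join leaves fhvhv empty for every day before February 2019, and in pandas adding NaN to a number gives NaN, so the gaps are filled with 0 before the two columns are summed. A dictionary-based sketch of the same logic, using made-up counts:

```python
# Hypothetical daily counts; the fhvhv series only starts on 2019-02-01.
fhv = {"2019-01-30": 700000, "2019-01-31": 710000,
       "2019-02-01": 60000, "2019-02-02": 65000}
fhvhv = {"2019-02-01": 650000, "2019-02-02": 660000}

# Left join on the date, treating a missing fhvhv value as 0 (the fillna(0) step).
full_fhv = {day: count + fhvhv.get(day, 0) for day, count in fhv.items()}
print(full_fhv)
```

Days before the license change keep their FHV count unchanged, and days after it carry the sum of both series.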

Visualizing the joined dataset¶

%%local

plt.figure(figsize=[14,8]);

plt.plot(full_timeseries, marker='8', linestyle='--')

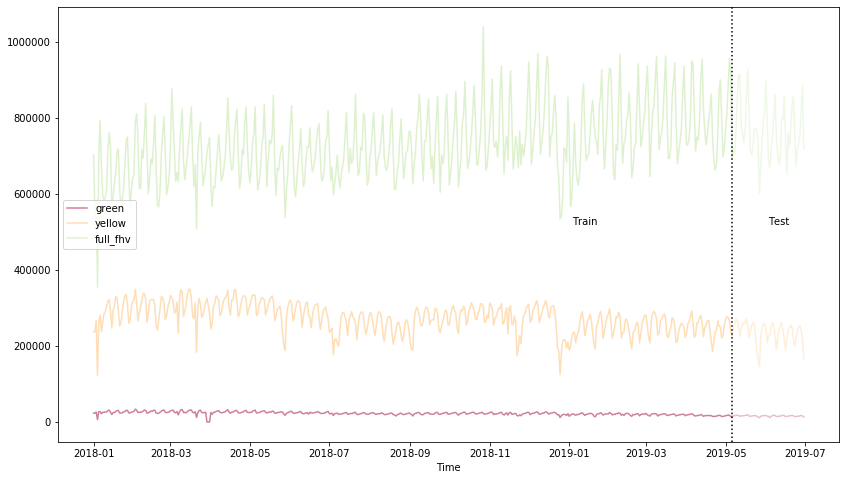

Looking at the training/test split now¶

%%local

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

#cols_float = time_series_df.drop(['pulocationid', 'dolocationid'], axis=1).columns

cols_float = full_timeseries.columns

cmap = plt.get_cmap('Spectral')  # matplotlib.cm.get_cmap was removed in newer Matplotlib releases

colors = cmap(np.arange(0,len(cols_float))/len(cols_float))

plt.figure(figsize=[14,8]);

for c in range(len(cols_float)):

    plt.plot(full_timeseries.loc[:end_training][cols_float[c]], alpha=0.5, color=colors[c], label=cols_float[c]);

plt.legend(loc='center left');

for c in range(len(cols_float)):

    plt.plot(full_timeseries.loc[end_training:][cols_float[c]], alpha=0.25, color=colors[c], label=None);

plt.axvline(x=end_training, color='k', linestyle=':');

plt.text(full_timeseries.index[int((full_timeseries.shape[0]-n_weeks*prediction_length)*0.75)], full_timeseries.max().max()/2, 'Train');

plt.text(full_timeseries.index[full_timeseries.shape[0]-int(n_weeks*prediction_length/2)], full_timeseries.max().max()/2, 'Test');

plt.xlabel('Time');

plt.show()

%%local

import json

import boto3

end_training = full_timeseries.index[-n_weeks*prediction_length]

print('end training time', end_training)

time_series = []

for ts in full_timeseries.columns:

    time_series.append(full_timeseries[ts])

time_series_training = []

for ts in full_timeseries.columns:

    time_series_training.append(full_timeseries.loc[:end_training][ts])

import sagemaker

sagemaker_session = sagemaker.Session()

bucket = sagemaker_session.default_bucket()

key_prefix = '2019workshop-deepar/'

s3_client = boto3.client('s3')

def series_to_obj(ts, cat=None):

    # tolist() converts NumPy scalars to native Python numbers so json.dumps can serialize them

    obj = {"start": str(ts.index[0]), "target": ts.tolist()}

    if cat:

        obj["cat"] = cat

    return obj

def series_to_jsonline(ts, cat=None):

    return json.dumps(series_to_obj(ts, cat))

encoding = "utf-8"

data = ''

for ts in time_series_training:

    data = data + series_to_jsonline(ts)

    data = data + '\n'

s3_client.put_object(Body=data.encode(encoding), Bucket=bucket, Key=key_prefix + 'data/train/train.json')

data = ''

for ts in time_series:

    data = data + series_to_jsonline(ts)

    data = data + '\n'

s3_client.put_object(Body=data.encode(encoding), Bucket=bucket, Key=key_prefix + 'data/test/test.json')

end training time 2019-05-06 00:00:00

{'ResponseMetadata': {'RequestId': '080F10A207131EEC',

'HostId': 'QunHqencw40NUjnNNHS/tFdSLN45HBmNRNPG2VNRqUxbZAZV3gg1Yc5caHB1IN+bl0VnnLNinaY=',

'HTTPStatusCode': 200,

'HTTPHeaders': {'x-amz-id-2': 'QunHqencw40NUjnNNHS/tFdSLN45HBmNRNPG2VNRqUxbZAZV3gg1Yc5caHB1IN+bl0VnnLNinaY=',

'x-amz-request-id': '080F10A207131EEC',

'date': 'Sat, 30 Nov 2019 16:26:35 GMT',

'etag': '"3d0c723b9f128d637f003391b7546c16"',

'content-length': '0',

'server': 'AmazonS3'},

'RetryAttempts': 0},

'ETag': '"3d0c723b9f128d637f003391b7546c16"'}
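The files uploaded above are in JSON Lines format: one JSON object per line, each with a start timestamp and a target array. The following standard-library sketch shows what such a payload looks like and how to read it back. The short series and this simplified `series_to_jsonline` signature are illustrative assumptions, not the notebook's helper:

```python
import json

def series_to_jsonline(start, values, cat=None):
    """Serialize one series as a single JSON object on one line."""
    obj = {"start": start, "target": list(values)}
    if cat is not None:
        obj["cat"] = cat
    return json.dumps(obj)

# Hypothetical short series; the real ones hold one value per day.
series = {
    "green": [25651, 22899, 17165],
    "yellow": [348198, 278900, 291098],
}
payload = "".join(
    series_to_jsonline("2018-01-01 00:00:00", values) + "\n"
    for values in series.values()
)
print(payload, end="")

# Reading it back: one json.loads call per non-empty line.
records = [json.loads(line) for line in payload.splitlines() if line]
```

Since no cat field is written, the series are not distinguished by category, which matches the notebook's call to series_to_jsonline without a cat argument.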

Setting our data and output locations¶

%%local

import boto3

import s3fs

import sagemaker

from sagemaker import get_execution_role

sagemaker_session = sagemaker.Session()

role = get_execution_role()

s3_data_path = "{}/{}data".format(bucket, key_prefix)

s3_output_path = "{}/{}output".format(bucket, key_prefix)

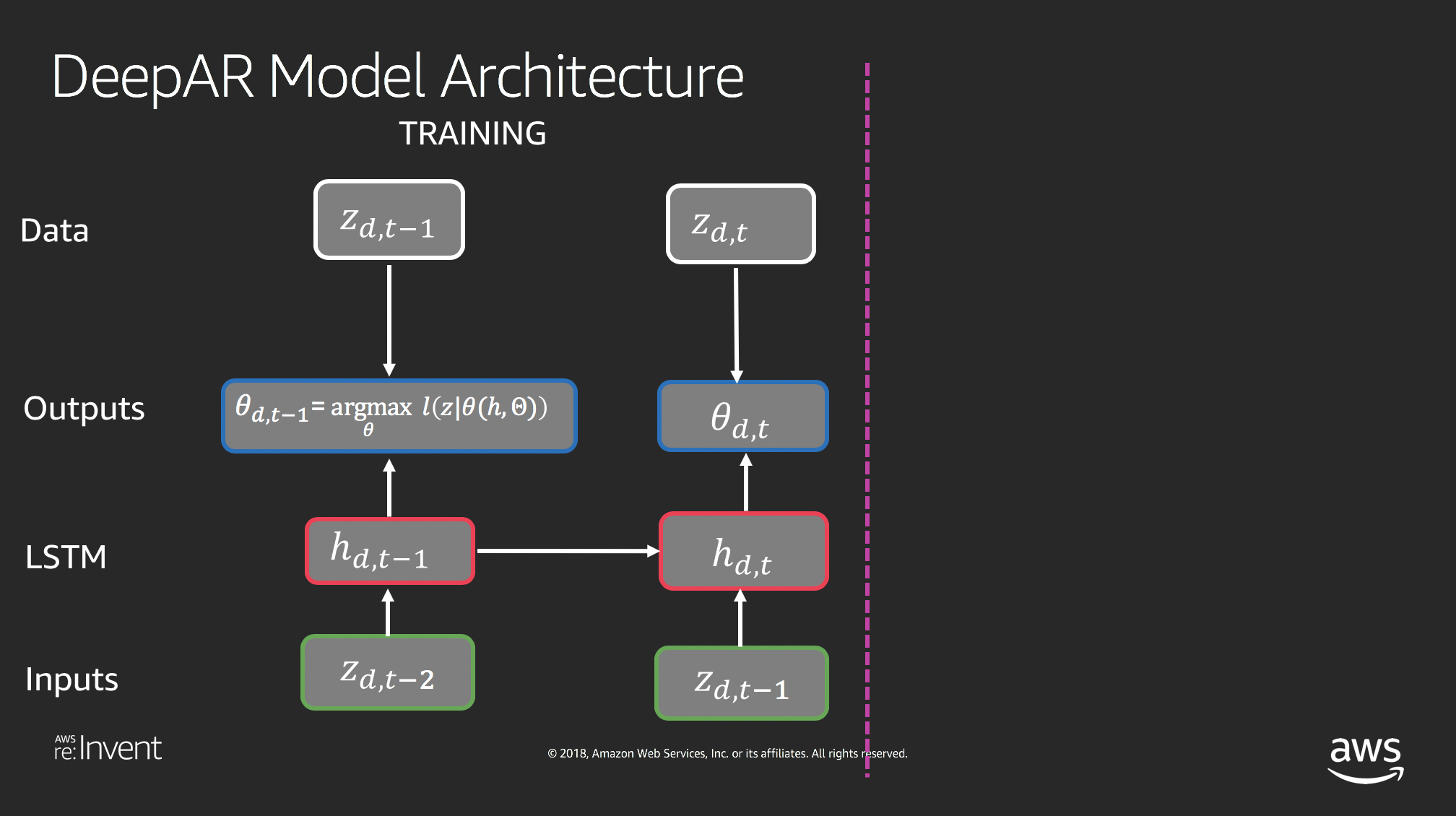

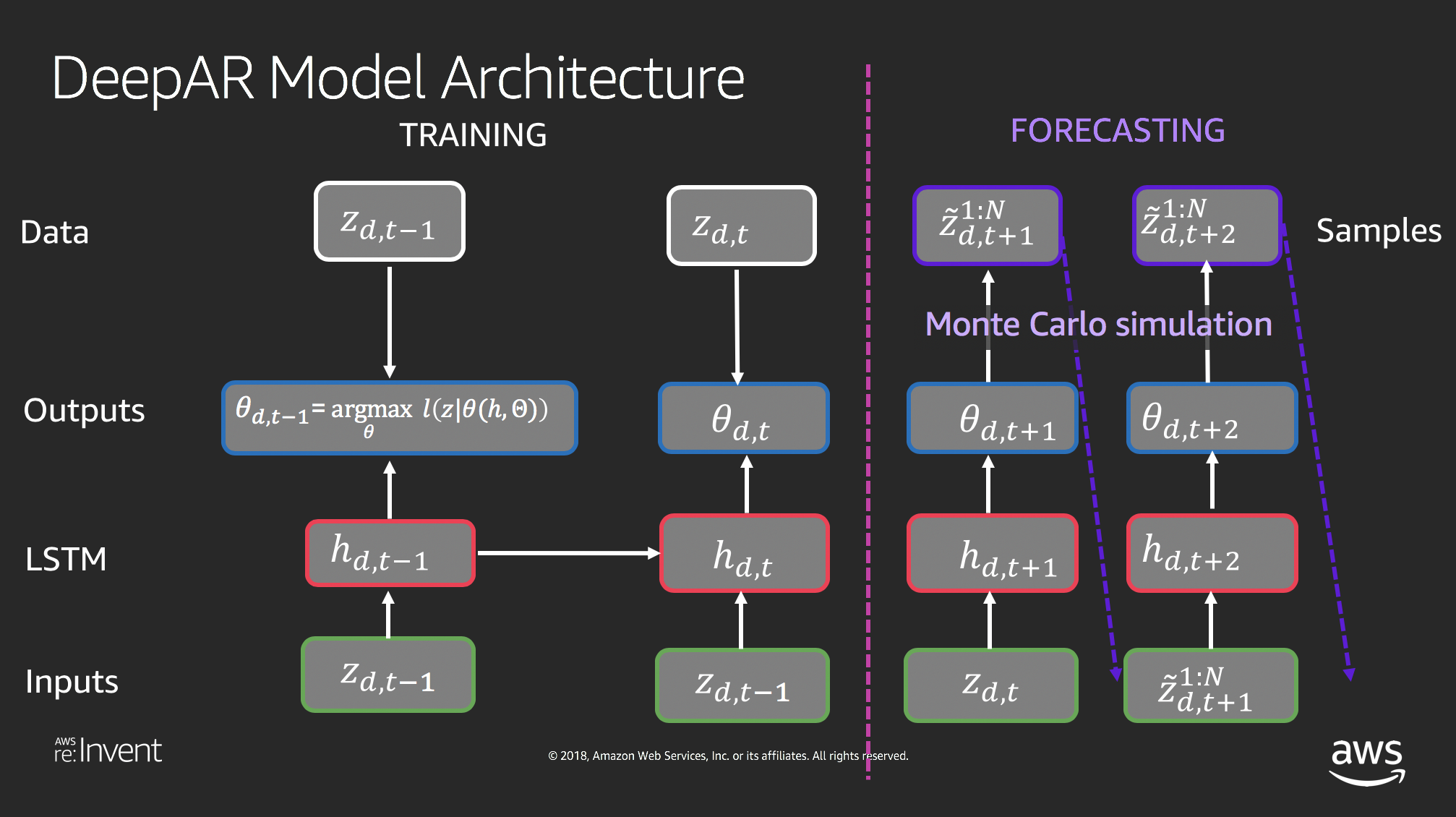

Setting up the DeepAR Algorithm settings¶

%%local

region = sagemaker_session.boto_region_name

image_name = sagemaker.amazon.amazon_estimator.get_image_uri(region, "forecasting-deepar", "latest")

estimator = sagemaker.estimator.Estimator(

sagemaker_session=sagemaker_session,

image_name=image_name,

role=role,

train_instance_count=1,

train_instance_type='ml.c4.2xlarge',

base_job_name='DeepAR-forecast-taxidata',

output_path="s3://" + s3_output_path

)

## context_length = The number of time-points that the model gets to see before making the prediction.

context_length = 14

hyperparameters = {

"time_freq": "D",

"context_length": str(context_length),

"prediction_length": str(prediction_length),

"num_cells": "40",

"num_layers": "3",

"likelihood": "gaussian",

"epochs": "100",

"mini_batch_size": "32",

"learning_rate": "0.001",

"dropout_rate": "0.05",

"early_stopping_patience": "10"

}

estimator.set_hyperparameters(**hyperparameters)

Kicking off the training¶

%%local

estimator.fit(inputs={

"train": "s3://{}/train/".format(s3_data_path),

"test": "s3://{}/test/".format(s3_data_path)

})
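The training log printed by fit() is verbose, but the per-epoch loss is easy to pull out of the #quality_metric lines. Below is a small sketch using a regular expression. The pattern is inferred from the log format shown in this notebook rather than from any documented contract, and `epoch_losses` is a helper name made up for this example:

```python
import re

# Matches the per-epoch summary lines, not the per-batch ones (those contain "batch=").
EPOCH_LOSS = re.compile(
    r"#quality_metric: host=\S+, epoch=(\d+), train loss <loss>=([0-9.]+)"
)

def epoch_losses(log_lines):
    """Return {epoch: loss} from DeepAR '#quality_metric' epoch summary lines."""
    losses = {}
    for line in log_lines:
        match = EPOCH_LOSS.search(line)
        if match:
            losses[int(match.group(1))] = float(match.group(2))
    return losses

# Lines copied from this notebook's training log.
sample = [
    "[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=0, batch=5 train loss <loss>=12.5616491636",
    "[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=0, train loss <loss>=12.4054102898",
    "[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=1, train loss <loss>=11.612717247",
]
print(epoch_losses(sample))
```

Tracking these values makes it easy to see whether the loss is still improving or whether early stopping is likely to kick in.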

2019-11-30 16:26:45 Starting - Starting the training job...

2019-11-30 16:27:13 Starting - Launching requested ML instances.........

2019-11-30 16:28:18 Starting - Preparing the instances for training...

2019-11-30 16:29:07 Downloading - Downloading input data...

2019-11-30 16:29:41 Training - Training image download completed. Training in progress..[31mArguments: train[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Reading default configuration from /opt/amazon/lib/python.7/site-packages/algorithm/resources/default-input.json: {u'num_dynamic_feat': u'auto', u'dropout_rate': u'0.10', u'mini_batch_size': u'128', u'test_quantiles': u'[0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]', u'_tuning_objective_metric': u'', u'_num_gpus': u'auto', u'num_eval_samples': u'100', u'learning_rate': u'0.001', u'num_cells': u'40', u'num_layers': u'2', u'embedding_dimension': u'10', u'_kvstore': u'auto', u'_num_kv_servers': u'auto', u'cardinality': u'auto', u'likelihood': u'student-t', u'early_stopping_patience': u''}[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Reading provided configuration from /opt/ml/input/config/hyperparameters.json: {u'dropout_rate': u'0.05', u'learning_rate': u'0.001', u'num_cells': u'40', u'prediction_length': u'14', u'epochs': u'100', u'time_freq': u'D', u'context_length': u'14', u'num_layers': u'3', u'mini_batch_size': u'32', u'likelihood': u'gaussian', u'early_stopping_patience': u'10'}[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Final configuration: {u'dropout_rate': u'0.05', u'test_quantiles': u'[0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]', u'_tuning_objective_metric': u'', u'num_eval_samples': u'100', u'learning_rate': u'0.001', u'num_layers': u'3', u'epochs': u'100', u'embedding_dimension': u'10', u'num_cells': u'40', u'_num_kv_servers': u'auto', u'mini_batch_size': u'32', u'likelihood': u'gaussian', u'num_dynamic_feat': u'auto', u'cardinality': u'auto', u'_num_gpus': u'auto', u'prediction_length': u'14', u'time_freq': u'D', u'context_length': u'14', u'_kvstore': u'auto', u'early_stopping_patience': u'10'}[0m

[31mProcess 1 is a worker.[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Detected entry point for worker worker[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Using early stopping with patience 10[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] [cardinality=auto] cat field was NOT found in the file /opt/ml/input/data/train/train.json and will NOT be used for training.[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] [num_dynamic_feat=auto] dynamic_feat field was NOT found in the file /opt/ml/input/data/train/train.json and will NOT be used for training.[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Training set statistics:[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Integer time series[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] number of time series: 3[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] number of observations: 1473[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] mean target length: 491[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] min/mean/max target: 5.0/342890.394433/1039874.0[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] mean abs(target): 342890.394433[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] contains missing values: no[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Small number of time series. Doing 10 number of passes over dataset per epoch.[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Test set statistics:[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Integer time series[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] number of time series: 3[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] number of observations: 1638[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] mean target length: 546[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] min/mean/max target: 5.0/342546.620269/1039874.0[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] mean abs(target): 342546.620269[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] contains missing values: no[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] nvidia-smi took: 0.0251910686493 secs to identify 0 gpus[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Number of GPUs being used: 0[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Create Store: local[0m

[31m#metrics {"Metrics": {"get_graph.time": {"count": 1, "max": 72.59106636047363, "sum": 72.59106636047363, "min": 72.59106636047363}}, "EndTime": 1575131383.872348, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131383.79892}

[0m

[31m[11/30/2019 16:29:43 INFO 140657760761664] Number of GPUs being used: 0[0m

[31m#metrics {"Metrics": {"initialize.time": {"count": 1, "max": 179.97288703918457, "sum": 179.97288703918457, "min": 179.97288703918457}}, "EndTime": 1575131383.979019, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131383.872428}

[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[0] Batch[0] avg_epoch_loss=13.084117[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=0, batch=0 train loss <loss>=13.0841169357[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[0] Batch[5] avg_epoch_loss=12.561649[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=0, batch=5 train loss <loss>=12.5616491636[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[0] Batch [5]#011Speed: 1082.40 samples/sec#011loss=12.561649[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] processed a total of 304 examples[0m

[31m#metrics {"Metrics": {"epochs": {"count": 1, "max": 100, "sum": 100.0, "min": 100}, "update.time": {"count": 1, "max": 438.4138584136963, "sum": 438.4138584136963, "min": 438.4138584136963}}, "EndTime": 1575131384.417562, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131383.97908}

[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=693.261703397 records/second[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #progress_metric: host=algo-1, completed 1 % of epochs[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=0, train loss <loss>=12.4054102898[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] best epoch loss so far[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_69c66750-0a52-494a-838b-eff95388ff56-0000.params"[0m

[31m#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 14.506101608276367, "sum": 14.506101608276367, "min": 14.506101608276367}}, "EndTime": 1575131384.432697, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131384.417626}

[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[1] Batch[0] avg_epoch_loss=11.543509[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=1, batch=0 train loss <loss>=11.5435094833[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[1] Batch[5] avg_epoch_loss=11.853811[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=1, batch=5 train loss <loss>=11.8538109461[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[1] Batch [5]#011Speed: 1200.96 samples/sec#011loss=11.853811[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] processed a total of 303 examples[0m

[31m#metrics {"Metrics": {"update.time": {"count": 1, "max": 313.37714195251465, "sum": 313.37714195251465, "min": 313.37714195251465}}, "EndTime": 1575131384.746183, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131384.432759}

[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=966.559590765 records/second[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #progress_metric: host=algo-1, completed 2 % of epochs[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=1, train loss <loss>=11.612717247[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] best epoch loss so far[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_180ffbc0-0b42-4349-aeb2-3dd997e411ee-0000.params"[0m

[31m#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 14.13583755493164, "sum": 14.13583755493164, "min": 14.13583755493164}}, "EndTime": 1575131384.760916, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131384.746256}

[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[2] Batch[0] avg_epoch_loss=11.307412[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=2, batch=0 train loss <loss>=11.3074121475[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[2] Batch[5] avg_epoch_loss=11.342184[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] #quality_metric: host=algo-1, epoch=2, batch=5 train loss <loss>=11.3421843847[0m

[31m[11/30/2019 16:29:44 INFO 140657760761664] Epoch[2] Batch [5]#011Speed: 1186.24 samples/sec#011loss=11.342184[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[2] Batch[10] avg_epoch_loss=11.268009[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=2, batch=10 train loss <loss>=11.1789993286[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[2] Batch [10]#011Speed: 1131.09 samples/sec#011loss=11.178999[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] processed a total of 328 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 351.1309623718262, "sum": 351.1309623718262, "min": 351.1309623718262}}, "EndTime": 1575131385.112164, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131384.760976}

[31m[11/30/2019 16:29:45 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=933.823852992 records/second[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #progress_metric: host=algo-1, completed 3 % of epochs[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=2, train loss <loss>=11.2680093592[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] best epoch loss so far[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_cb8228c2-d13f-4282-a8be-6c0110ee4205-0000.params"[0m

#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 13.696908950805664, "sum": 13.696908950805664, "min": 13.696908950805664}}, "EndTime": 1575131385.126428, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131385.112241}

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[3] Batch[0] avg_epoch_loss=11.775387[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=3, batch=0 train loss <loss>=11.7753868103[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[3] Batch[5] avg_epoch_loss=11.569252[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=3, batch=5 train loss <loss>=11.5692516963[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[3] Batch [5]#011Speed: 1216.54 samples/sec#011loss=11.569252[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] processed a total of 296 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 320.6939697265625, "sum": 320.6939697265625, "min": 320.6939697265625}}, "EndTime": 1575131385.447235, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131385.126488}

[31m[11/30/2019 16:29:45 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=922.675933702 records/second[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #progress_metric: host=algo-1, completed 4 % of epochs[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=3, train loss <loss>=11.6022842407[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[4] Batch[0] avg_epoch_loss=11.529972[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=4, batch=0 train loss <loss>=11.5299720764[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[4] Batch[5] avg_epoch_loss=11.531543[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=4, batch=5 train loss <loss>=11.531542778[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[4] Batch [5]#011Speed: 1128.21 samples/sec#011loss=11.531543[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] processed a total of 308 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 336.6720676422119, "sum": 336.6720676422119, "min": 336.6720676422119}}, "EndTime": 1575131385.784423, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131385.447313}

[31m[11/30/2019 16:29:45 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=914.529829482 records/second[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #progress_metric: host=algo-1, completed 5 % of epochs[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=4, train loss <loss>=11.496231842[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] Epoch[5] Batch[0] avg_epoch_loss=11.429693[0m

[31m[11/30/2019 16:29:45 INFO 140657760761664] #quality_metric: host=algo-1, epoch=5, batch=0 train loss <loss>=11.429693222[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[5] Batch[5] avg_epoch_loss=11.502197[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=5, batch=5 train loss <loss>=11.5021974246[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[5] Batch [5]#011Speed: 1210.92 samples/sec#011loss=11.502197[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[5] Batch[10] avg_epoch_loss=11.288384[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=5, batch=10 train loss <loss>=11.0318073273[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[5] Batch [10]#011Speed: 1166.00 samples/sec#011loss=11.031807[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] processed a total of 336 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 367.095947265625, "sum": 367.095947265625, "min": 367.095947265625}}, "EndTime": 1575131386.152045, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131385.7845}

[31m[11/30/2019 16:29:46 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=915.037687483 records/second[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #progress_metric: host=algo-1, completed 6 % of epochs[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=5, train loss <loss>=11.288383744[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[6] Batch[0] avg_epoch_loss=11.120630[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=6, batch=0 train loss <loss>=11.1206302643[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[6] Batch[5] avg_epoch_loss=11.133206[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=6, batch=5 train loss <loss>=11.1332060496[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[6] Batch [5]#011Speed: 1149.90 samples/sec#011loss=11.133206[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] processed a total of 305 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 314.59808349609375, "sum": 314.59808349609375, "min": 314.59808349609375}}, "EndTime": 1575131386.467154, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131386.15211}

[31m[11/30/2019 16:29:46 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=969.142829437 records/second[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #progress_metric: host=algo-1, completed 7 % of epochs[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=6, train loss <loss>=11.1763834[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] best epoch loss so far[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_bc44f47d-8f44-4ede-b4e3-9e726c1c9b8c-0000.params"[0m

#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 14.055013656616211, "sum": 14.055013656616211, "min": 14.055013656616211}}, "EndTime": 1575131386.481823, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131386.46723}

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[7] Batch[0] avg_epoch_loss=11.204243[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=7, batch=0 train loss <loss>=11.2042427063[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[7] Batch[5] avg_epoch_loss=11.445836[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=7, batch=5 train loss <loss>=11.4458359083[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[7] Batch [5]#011Speed: 1099.40 samples/sec#011loss=11.445836[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] processed a total of 316 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 322.6950168609619, "sum": 322.6950168609619, "min": 322.6950168609619}}, "EndTime": 1575131386.804633, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131386.481883}

[31m[11/30/2019 16:29:46 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=978.940348457 records/second[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #progress_metric: host=algo-1, completed 8 % of epochs[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=7, train loss <loss>=11.4341204643[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] Epoch[8] Batch[0] avg_epoch_loss=11.525706[0m

[31m[11/30/2019 16:29:46 INFO 140657760761664] #quality_metric: host=algo-1, epoch=8, batch=0 train loss <loss>=11.5257062912[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[8] Batch[5] avg_epoch_loss=11.321895[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=8, batch=5 train loss <loss>=11.3218946457[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[8] Batch [5]#011Speed: 1002.87 samples/sec#011loss=11.321895[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[8] Batch[10] avg_epoch_loss=10.957104[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=8, batch=10 train loss <loss>=10.5193548203[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[8] Batch [10]#011Speed: 1042.35 samples/sec#011loss=10.519355[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] processed a total of 326 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 383.8310241699219, "sum": 383.8310241699219, "min": 383.8310241699219}}, "EndTime": 1575131387.189002, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131386.8047}

[31m[11/30/2019 16:29:47 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=849.088935738 records/second[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #progress_metric: host=algo-1, completed 9 % of epochs[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=8, train loss <loss>=10.9571038159[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] best epoch loss so far[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_3517a004-cfca-454a-a835-341f5c4e6434-0000.params"[0m

#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 21.010875701904297, "sum": 21.010875701904297, "min": 21.010875701904297}}, "EndTime": 1575131387.210623, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131387.189077}

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[9] Batch[0] avg_epoch_loss=11.336051[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=9, batch=0 train loss <loss>=11.3360509872[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[9] Batch[5] avg_epoch_loss=11.312789[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=9, batch=5 train loss <loss>=11.3127894402[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[9] Batch [5]#011Speed: 1033.45 samples/sec#011loss=11.312789[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[9] Batch[10] avg_epoch_loss=11.353988[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=9, batch=10 train loss <loss>=11.403427124[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[9] Batch [10]#011Speed: 881.09 samples/sec#011loss=11.403427[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] processed a total of 336 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 413.4540557861328, "sum": 413.4540557861328, "min": 413.4540557861328}}, "EndTime": 1575131387.624198, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131387.210689}

[31m[11/30/2019 16:29:47 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=812.447549835 records/second[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #progress_metric: host=algo-1, completed 10 % of epochs[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=9, train loss <loss>=11.3539883874[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[10] Batch[0] avg_epoch_loss=10.794859[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=10, batch=0 train loss <loss>=10.7948589325[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[10] Batch[5] avg_epoch_loss=11.195995[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] #quality_metric: host=algo-1, epoch=10, batch=5 train loss <loss>=11.1959945361[0m

[31m[11/30/2019 16:29:47 INFO 140657760761664] Epoch[10] Batch [5]#011Speed: 949.42 samples/sec#011loss=11.195995[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[10] Batch[10] avg_epoch_loss=11.216783[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=10, batch=10 train loss <loss>=11.2417282104[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[10] Batch [10]#011Speed: 899.09 samples/sec#011loss=11.241728[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] processed a total of 350 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 435.26315689086914, "sum": 435.26315689086914, "min": 435.26315689086914}}, "EndTime": 1575131388.060028, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131387.624271}

[31m[11/30/2019 16:29:48 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=803.899002688 records/second[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #progress_metric: host=algo-1, completed 11 % of epochs[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=10, train loss <loss>=11.2167825699[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[11] Batch[0] avg_epoch_loss=11.323181[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=11, batch=0 train loss <loss>=11.3231811523[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[11] Batch[5] avg_epoch_loss=11.015685[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=11, batch=5 train loss <loss>=11.0156849225[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[11] Batch [5]#011Speed: 847.25 samples/sec#011loss=11.015685[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[11] Batch[10] avg_epoch_loss=11.156606[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=11, batch=10 train loss <loss>=11.3257108688[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[11] Batch [10]#011Speed: 1025.19 samples/sec#011loss=11.325711[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] processed a total of 333 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 455.63697814941406, "sum": 455.63697814941406, "min": 455.63697814941406}}, "EndTime": 1575131388.516234, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131388.060105}

[31m[11/30/2019 16:29:48 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=730.671786467 records/second[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #progress_metric: host=algo-1, completed 12 % of epochs[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=11, train loss <loss>=11.1566058072[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[12] Batch[0] avg_epoch_loss=11.386516[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=12, batch=0 train loss <loss>=11.3865156174[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[12] Batch[5] avg_epoch_loss=11.044365[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=12, batch=5 train loss <loss>=11.044365406[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[12] Batch [5]#011Speed: 1024.15 samples/sec#011loss=11.044365[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[12] Batch[10] avg_epoch_loss=11.160591[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=12, batch=10 train loss <loss>=11.3000627518[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] Epoch[12] Batch [10]#011Speed: 1059.63 samples/sec#011loss=11.300063[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] processed a total of 335 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 406.9790840148926, "sum": 406.9790840148926, "min": 406.9790840148926}}, "EndTime": 1575131388.923674, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131388.516307}

[31m[11/30/2019 16:29:48 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=822.915883581 records/second[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #progress_metric: host=algo-1, completed 13 % of epochs[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] #quality_metric: host=algo-1, epoch=12, train loss <loss>=11.1605914723[0m

[31m[11/30/2019 16:29:48 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[13] Batch[0] avg_epoch_loss=11.314456[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=13, batch=0 train loss <loss>=11.314455986[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[13] Batch[5] avg_epoch_loss=11.248668[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=13, batch=5 train loss <loss>=11.2486680349[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[13] Batch [5]#011Speed: 1105.80 samples/sec#011loss=11.248668[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[13] Batch[10] avg_epoch_loss=11.205602[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=13, batch=10 train loss <loss>=11.1539218903[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[13] Batch [10]#011Speed: 995.09 samples/sec#011loss=11.153922[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] processed a total of 348 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 397.4108695983887, "sum": 397.4108695983887, "min": 397.4108695983887}}, "EndTime": 1575131389.321589, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131388.923749}

[31m[11/30/2019 16:29:49 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=875.46058706 records/second[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #progress_metric: host=algo-1, completed 14 % of epochs[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=13, train loss <loss>=11.2056016055[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[14] Batch[0] avg_epoch_loss=10.776047[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=14, batch=0 train loss <loss>=10.7760467529[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[14] Batch[5] avg_epoch_loss=10.890142[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=14, batch=5 train loss <loss>=10.890141805[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[14] Batch [5]#011Speed: 1192.29 samples/sec#011loss=10.890142[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[14] Batch[10] avg_epoch_loss=10.867761[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=14, batch=10 train loss <loss>=10.8409036636[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[14] Batch [10]#011Speed: 1086.88 samples/sec#011loss=10.840904[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] processed a total of 328 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 359.12179946899414, "sum": 359.12179946899414, "min": 359.12179946899414}}, "EndTime": 1575131389.681233, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131389.321647}

[31m[11/30/2019 16:29:49 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=913.043344833 records/second[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #progress_metric: host=algo-1, completed 15 % of epochs[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=14, train loss <loss>=10.8677608317[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] best epoch loss so far[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_0fec42b3-c584-4660-9bba-d7bcd3a29e84-0000.params"[0m

#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 17.184019088745117, "sum": 17.184019088745117, "min": 17.184019088745117}}, "EndTime": 1575131389.69897, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131389.681311}

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[15] Batch[0] avg_epoch_loss=10.758276[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=15, batch=0 train loss <loss>=10.7582759857[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[15] Batch[5] avg_epoch_loss=11.068750[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] #quality_metric: host=algo-1, epoch=15, batch=5 train loss <loss>=11.0687500636[0m

[31m[11/30/2019 16:29:49 INFO 140657760761664] Epoch[15] Batch [5]#011Speed: 1117.68 samples/sec#011loss=11.068750[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] processed a total of 300 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 329.8060894012451, "sum": 329.8060894012451, "min": 329.8060894012451}}, "EndTime": 1575131390.028907, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131389.699043}

[31m[11/30/2019 16:29:50 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=909.302658336 records/second[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #progress_metric: host=algo-1, completed 16 % of epochs[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=15, train loss <loss>=10.9549746513[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[16] Batch[0] avg_epoch_loss=11.169759[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=16, batch=0 train loss <loss>=11.1697587967[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[16] Batch[5] avg_epoch_loss=11.076884[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=16, batch=5 train loss <loss>=11.0768841108[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[16] Batch [5]#011Speed: 1164.08 samples/sec#011loss=11.076884[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] processed a total of 318 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 340.06404876708984, "sum": 340.06404876708984, "min": 340.06404876708984}}, "EndTime": 1575131390.369544, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131390.028989}

[31m[11/30/2019 16:29:50 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=934.826960924 records/second[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #progress_metric: host=algo-1, completed 17 % of epochs[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=16, train loss <loss>=10.9921466827[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[17] Batch[0] avg_epoch_loss=11.118506[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=17, batch=0 train loss <loss>=11.1185064316[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[17] Batch[5] avg_epoch_loss=10.985323[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=17, batch=5 train loss <loss>=10.9853231112[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[17] Batch [5]#011Speed: 1146.54 samples/sec#011loss=10.985323[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] processed a total of 309 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 323.4109878540039, "sum": 323.4109878540039, "min": 323.4109878540039}}, "EndTime": 1575131390.693494, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131390.369612}

[31m[11/30/2019 16:29:50 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=955.139269164 records/second[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #progress_metric: host=algo-1, completed 18 % of epochs[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=17, train loss <loss>=11.0881063461[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[18] Batch[0] avg_epoch_loss=10.437140[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=18, batch=0 train loss <loss>=10.4371395111[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[18] Batch[5] avg_epoch_loss=11.002823[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] #quality_metric: host=algo-1, epoch=18, batch=5 train loss <loss>=11.0028233528[0m

[31m[11/30/2019 16:29:50 INFO 140657760761664] Epoch[18] Batch [5]#011Speed: 1213.98 samples/sec#011loss=11.002823[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] processed a total of 318 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 339.57600593566895, "sum": 339.57600593566895, "min": 339.57600593566895}}, "EndTime": 1575131391.03361, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131390.693562}

[31m[11/30/2019 16:29:51 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=936.147746678 records/second[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #progress_metric: host=algo-1, completed 19 % of epochs[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=18, train loss <loss>=10.9492453575[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[19] Batch[0] avg_epoch_loss=11.144054[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=19, batch=0 train loss <loss>=11.1440544128[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[19] Batch[5] avg_epoch_loss=11.232653[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=19, batch=5 train loss <loss>=11.2326534589[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[19] Batch [5]#011Speed: 1090.45 samples/sec#011loss=11.232653[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[19] Batch[10] avg_epoch_loss=11.190658[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=19, batch=10 train loss <loss>=11.1402643204[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[19] Batch [10]#011Speed: 1222.95 samples/sec#011loss=11.140264[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] processed a total of 335 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 382.02691078186035, "sum": 382.02691078186035, "min": 382.02691078186035}}, "EndTime": 1575131391.416161, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131391.033688}

[31m[11/30/2019 16:29:51 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=876.649359433 records/second[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #progress_metric: host=algo-1, completed 20 % of epochs[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=19, train loss <loss>=11.1906583959[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[20] Batch[0] avg_epoch_loss=10.614120[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=20, batch=0 train loss <loss>=10.6141204834[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[20] Batch[5] avg_epoch_loss=10.965454[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=20, batch=5 train loss <loss>=10.9654542605[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[20] Batch [5]#011Speed: 1217.96 samples/sec#011loss=10.965454[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[20] Batch[10] avg_epoch_loss=11.028810[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=20, batch=10 train loss <loss>=11.1048358917[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[20] Batch [10]#011Speed: 973.56 samples/sec#011loss=11.104836[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] processed a total of 333 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 387.83907890319824, "sum": 387.83907890319824, "min": 387.83907890319824}}, "EndTime": 1575131391.804478, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131391.416235}

[31m[11/30/2019 16:29:51 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=858.351303134 records/second[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #progress_metric: host=algo-1, completed 21 % of epochs[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=20, train loss <loss>=11.0288095474[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] loss did not improve[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] Epoch[21] Batch[0] avg_epoch_loss=10.710171[0m

[31m[11/30/2019 16:29:51 INFO 140657760761664] #quality_metric: host=algo-1, epoch=21, batch=0 train loss <loss>=10.7101707458[0m

[31m[11/30/2019 16:29:52 INFO 140657760761664] Epoch[21] Batch[5] avg_epoch_loss=10.991527[0m

[31m[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=21, batch=5 train loss <loss>=10.9915272395[0m

[31m[11/30/2019 16:29:52 INFO 140657760761664] Epoch[21] Batch [5]#011Speed: 1129.43 samples/sec#011loss=10.991527[0m

[31m[11/30/2019 16:29:52 INFO 140657760761664] processed a total of 303 examples[0m

#metrics {"Metrics": {"update.time": {"count": 1, "max": 352.0510196685791, "sum": 352.0510196685791, "min": 352.0510196685791}}, "EndTime": 1575131392.15703, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131391.804556}

[31m[11/30/2019 16:29:52 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=860.387525515 records/second[0m

[31m[11/30/2019 16:29:52 INFO 140657760761664] #progress_metric: host=algo-1, completed 22 % of epochs[0m

[31m[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=21, train loss <loss>=10.8595946312[0m

[31m[11/30/2019 16:29:52 INFO 140657760761664] best epoch loss so far[0m

[31m[11/30/2019 16:29:52 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_afd195b9-b81b-481a-b83f-fc3da9cc67d7-0000.params"[0m

[31m#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 20.630836486816406, "sum": 20.630836486816406, "min": 20.630836486816406}}, "EndTime": 1575131392.178267, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131392.15711}

[0m

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[22] Batch[0] avg_epoch_loss=10.801229

[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=22, batch=0 train loss <loss>=10.8012285233

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[22] Batch[5] avg_epoch_loss=10.897065

[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=22, batch=5 train loss <loss>=10.8970645269

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[22] Batch [5]#011Speed: 1235.31 samples/sec#011loss=10.897065

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[22] Batch[10] avg_epoch_loss=10.918552

[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=22, batch=10 train loss <loss>=10.9443367004

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[22] Batch [10]#011Speed: 1233.26 samples/sec#011loss=10.944337

[11/30/2019 16:29:52 INFO 140657760761664] processed a total of 336 examples

#metrics {"Metrics": {"update.time": {"count": 1, "max": 355.93295097351074, "sum": 355.93295097351074, "min": 355.93295097351074}}, "EndTime": 1575131392.534308, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131392.178325}

[11/30/2019 16:29:52 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=943.719874562 records/second

[11/30/2019 16:29:52 INFO 140657760761664] #progress_metric: host=algo-1, completed 23 % of epochs

[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=22, train loss <loss>=10.9185518785

[11/30/2019 16:29:52 INFO 140657760761664] loss did not improve

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[23] Batch[0] avg_epoch_loss=10.825591

[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=23, batch=0 train loss <loss>=10.8255910873

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[23] Batch[5] avg_epoch_loss=10.959688

[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=23, batch=5 train loss <loss>=10.9596881866

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[23] Batch [5]#011Speed: 1207.47 samples/sec#011loss=10.959688

[11/30/2019 16:29:52 INFO 140657760761664] processed a total of 304 examples

#metrics {"Metrics": {"update.time": {"count": 1, "max": 330.36303520202637, "sum": 330.36303520202637, "min": 330.36303520202637}}, "EndTime": 1575131392.865151, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131392.534381}

[11/30/2019 16:29:52 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=919.812798202 records/second

[11/30/2019 16:29:52 INFO 140657760761664] #progress_metric: host=algo-1, completed 24 % of epochs

[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=23, train loss <loss>=10.7987992287

[11/30/2019 16:29:52 INFO 140657760761664] best epoch loss so far

[11/30/2019 16:29:52 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_4d952657-a43f-4806-b881-55f084041e01-0000.params"

#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 19.411087036132812, "sum": 19.411087036132812, "min": 19.411087036132812}}, "EndTime": 1575131392.885128, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131392.865255}

[11/30/2019 16:29:52 INFO 140657760761664] Epoch[24] Batch[0] avg_epoch_loss=11.502991

[11/30/2019 16:29:52 INFO 140657760761664] #quality_metric: host=algo-1, epoch=24, batch=0 train loss <loss>=11.5029907227

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[24] Batch[5] avg_epoch_loss=11.037862

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=24, batch=5 train loss <loss>=11.0378623009

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[24] Batch [5]#011Speed: 1136.21 samples/sec#011loss=11.037862

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[24] Batch[10] avg_epoch_loss=11.062090

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=24, batch=10 train loss <loss>=11.0911626816

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[24] Batch [10]#011Speed: 1111.99 samples/sec#011loss=11.091163

[11/30/2019 16:29:53 INFO 140657760761664] processed a total of 341 examples

#metrics {"Metrics": {"update.time": {"count": 1, "max": 378.1099319458008, "sum": 378.1099319458008, "min": 378.1099319458008}}, "EndTime": 1575131393.263366, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131392.885207}

[11/30/2019 16:29:53 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=901.606249338 records/second

[11/30/2019 16:29:53 INFO 140657760761664] #progress_metric: host=algo-1, completed 25 % of epochs

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=24, train loss <loss>=11.0620897466

[11/30/2019 16:29:53 INFO 140657760761664] loss did not improve

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[25] Batch[0] avg_epoch_loss=10.812670

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=25, batch=0 train loss <loss>=10.812669754

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[25] Batch[5] avg_epoch_loss=10.899194

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=25, batch=5 train loss <loss>=10.8991940816

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[25] Batch [5]#011Speed: 1145.95 samples/sec#011loss=10.899194

[11/30/2019 16:29:53 INFO 140657760761664] processed a total of 312 examples

#metrics {"Metrics": {"update.time": {"count": 1, "max": 329.3180465698242, "sum": 329.3180465698242, "min": 329.3180465698242}}, "EndTime": 1575131393.593195, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131393.263439}

[11/30/2019 16:29:53 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=947.004459947 records/second

[11/30/2019 16:29:53 INFO 140657760761664] #progress_metric: host=algo-1, completed 26 % of epochs

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=25, train loss <loss>=10.7822431564

[11/30/2019 16:29:53 INFO 140657760761664] best epoch loss so far

[11/30/2019 16:29:53 INFO 140657760761664] Saved checkpoint to "/opt/ml/model/state_9b76b505-ec67-40b3-94df-bb134f1035d5-0000.params"

#metrics {"Metrics": {"state.serialize.time": {"count": 1, "max": 19.988059997558594, "sum": 19.988059997558594, "min": 19.988059997558594}}, "EndTime": 1575131393.613767, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131393.593273}

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[26] Batch[0] avg_epoch_loss=10.912527

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=26, batch=0 train loss <loss>=10.9125270844

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[26] Batch[5] avg_epoch_loss=10.956731

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=26, batch=5 train loss <loss>=10.9567310015

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[26] Batch [5]#011Speed: 1206.31 samples/sec#011loss=10.956731

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[26] Batch[10] avg_epoch_loss=11.084283

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=26, batch=10 train loss <loss>=11.2373447418

[11/30/2019 16:29:53 INFO 140657760761664] Epoch[26] Batch [10]#011Speed: 1168.12 samples/sec#011loss=11.237345

[11/30/2019 16:29:53 INFO 140657760761664] processed a total of 323 examples

#metrics {"Metrics": {"update.time": {"count": 1, "max": 364.0120029449463, "sum": 364.0120029449463, "min": 364.0120029449463}}, "EndTime": 1575131393.977882, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131393.613821}

[11/30/2019 16:29:53 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=887.077150741 records/second

[11/30/2019 16:29:53 INFO 140657760761664] #progress_metric: host=algo-1, completed 27 % of epochs

[11/30/2019 16:29:53 INFO 140657760761664] #quality_metric: host=algo-1, epoch=26, train loss <loss>=11.0842827017

[11/30/2019 16:29:53 INFO 140657760761664] loss did not improve

[11/30/2019 16:29:54 INFO 140657760761664] Epoch[27] Batch[0] avg_epoch_loss=11.405628

[11/30/2019 16:29:54 INFO 140657760761664] #quality_metric: host=algo-1, epoch=27, batch=0 train loss <loss>=11.4056282043

[11/30/2019 16:29:54 INFO 140657760761664] Epoch[27] Batch[5] avg_epoch_loss=11.410955

[11/30/2019 16:29:54 INFO 140657760761664] #quality_metric: host=algo-1, epoch=27, batch=5 train loss <loss>=11.4109549522

[11/30/2019 16:29:54 INFO 140657760761664] Epoch[27] Batch [5]#011Speed: 1171.53 samples/sec#011loss=11.410955

[11/30/2019 16:29:54 INFO 140657760761664] processed a total of 318 examples

#metrics {"Metrics": {"update.time": {"count": 1, "max": 337.0828628540039, "sum": 337.0828628540039, "min": 337.0828628540039}}, "EndTime": 1575131394.315439, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131393.977955}

[11/30/2019 16:29:54 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=943.111383263 records/second

[11/30/2019 16:29:54 INFO 140657760761664] #progress_metric: host=algo-1, completed 28 % of epochs

[11/30/2019 16:29:54 INFO 140657760761664] #quality_metric: host=algo-1, epoch=27, train loss <loss>=11.2308731079

[11/30/2019 16:29:54 INFO 140657760761664] loss did not improve

[11/30/2019 16:29:54 INFO 140657760761664] Epoch[28] Batch[0] avg_epoch_loss=10.775253

[11/30/2019 16:29:54 INFO 140657760761664] #quality_metric: host=algo-1, epoch=28, batch=0 train loss <loss>=10.7752532959

[11/30/2019 16:29:54 INFO 140657760761664] Epoch[28] Batch[5] avg_epoch_loss=11.065414

[11/30/2019 16:29:54 INFO 140657760761664] #quality_metric: host=algo-1, epoch=28, batch=5 train loss <loss>=11.0654142698

[11/30/2019 16:29:54 INFO 140657760761664] Epoch[28] Batch [5]#011Speed: 1148.01 samples/sec#011loss=11.065414

[11/30/2019 16:29:54 INFO 140657760761664] processed a total of 310 examples

#metrics {"Metrics": {"update.time": {"count": 1, "max": 341.9628143310547, "sum": 341.9628143310547, "min": 341.9628143310547}}, "EndTime": 1575131394.657948, "Dimensions": {"Host": "algo-1", "Operation": "training", "Algorithm": "AWS/DeepAR"}, "StartTime": 1575131394.315508}

[11/30/2019 16:29:54 INFO 140657760761664] #throughput_metric: host=algo-1, train throughput=906.236249471 records/second